Search engine traffic is the key to building any profitable blog. But here’s the thing: if Google doesn’t index your site, you will NOT get even a single visitor from Google Search.

If you want Google to rank your pages, your site needs to be indexed first. Whether you want Google to index your new site or quickly index your latest blog posts, this post is for you.

The first thing you probably want after launching a blog or website is to get it indexed by Google. Not getting their site indexed on Google is one of the biggest mistakes new bloggers make.

If you are one of them and want to get your site indexed on Google quickly, this tutorial is for you: you will discover a few quick ways to get your new site into Google search results.

If you are in a hurry and just want to know how to index a blog post in Google, check out this illustration:

Note: To index a blog post in Google using this procedure, you first need to verify your site in Google Search Console (GSC). Check out the step-by-step guide below:

So let’s make it simpler and discuss how you can get Google to index your site really quickly.

How to Verify Your Site Ownership In Google Search Console (2025 Guide)


The very first thing to remember is that getting your new site indexed on Google is free. You don’t have to pay anything. But doing it for the first time can be tricky.

1. Submit your website to Google

If you want Google to index your new site, the most direct way is to submit your URL through Google Search Console (GSC). For that, you need to create a free Google Search Console account (formerly called Google Webmaster Tools) using your Google account.

Here’s a simple step-by-step tutorial on how to submit your site to Google so it can start being indexed in search results.

Step 1: Go to Google Search Console. Once there, you’ll be asked to select a property type.

Here’s what it looks like:

As you can see above, you’ll find two options: Domain or URL prefix.

For the Domain property type, DNS (Domain Name System) verification is required, whereas the URL prefix type supports multiple verification methods.

Step 2: We recommend choosing URL prefix, as it lets you verify your site’s ownership using several methods. Once you enter your site’s URL, here’s what you’ll see.

As you can see above, it asks you to verify your ownership.

You can do this in several ways:

- Using your Google Analytics account

- Using your Google Tag Manager account

- Associating a DNS record with Google

- Adding an HTML meta tag to your site’s home page
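If you go with the meta tag method, Search Console gives you a tag like `<meta name="google-site-verification" content="..." />` to paste into the `<head>` section of your home page. Before clicking Verify, you may want to confirm the tag actually made it onto the page. Here’s a minimal Python sketch of that check. It assumes you already have your home page’s HTML as a string. `find_verification_token` is a hypothetical helper name, and the token value is a placeholder, not a real one.

```python
from html.parser import HTMLParser
from typing import Optional


class _VerificationTagFinder(HTMLParser):
    """Remembers the content of the first google-site-verification meta tag."""

    def __init__(self) -> None:
        super().__init__()
        self.token: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; attrs is a list of (name, value)
        if tag != "meta" or self.token is not None:
            return
        attributes = dict(attrs)
        if attributes.get("name") == "google-site-verification":
            self.token = attributes.get("content")


def find_verification_token(html: str) -> Optional[str]:
    """Hypothetical helper: return the verification token in the HTML, or None."""
    finder = _VerificationTagFinder()
    finder.feed(html)
    finder.close()
    return finder.token


# Example home page with a placeholder token (not a real one)
home_page = """<!doctype html>
<html><head>
<meta name="google-site-verification" content="EXAMPLE-TOKEN-123" />
<title>My Blog</title>
</head><body>Hello</body></html>"""

print(find_verification_token(home_page))  # EXAMPLE-TOKEN-123
```

If the function returns `None` for your live home page, the tag is missing. This can happen, for example, if a caching plugin is still serving an old copy.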

Quick tip: If you’re using WordPress, install the Rank Math SEO plugin, as it lets you quickly connect your website to Google Search Console so you can track how Google is indexing your website.

To verify using the Rank Math SEO plugin, you just need the HTML tag from Google Search Console, which looks like this:

Copy the entire code.

Now go to the Search Console settings in the Rank Math plugin.

Here’s what it looks like:

Paste the code into the Search Console field and click the “Authorize” button. That’s it: Google will verify your key and authorize your account.

Alternatively, you can submit your site to Google using the following method.

Once you have a GSC account, log in and you’ll see the Search Console dashboard, where you can add a new site by clicking “Add a property”.

Here’s what it looks like:

Click ‘Add a property’ under the property drop-down (you can add up to 1,000 properties to your Google Search Console account).

Verify your domain ownership using the recommended method above or one of the alternate methods available.

Next, go to the URL Inspection section, which looks like this:

Enter the URL of the specific post or page you want to inspect.

As you can see above, whenever you want Google to recrawl an individual page (usually after updating an old post), you can request indexing for it from Google Search Console through URL Inspection.


2. Create an XML sitemap and submit it to Google

An XML sitemap contains a list of all the website’s URLs such as blog posts and pages. Every website should have an XML sitemap because it maps out how the website is structured and what the website includes.

Search engine crawlers can find your site’s content more easily if you have an XML sitemap, because a sitemap shows crawlers what’s on your website in one place.
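Here’s roughly what a sitemap looks like under the hood. The sketch below (Python, standard library only) builds a minimal sitemap in the sitemaps.org format. That format uses a `<urlset>` element with one `<url>` entry per page, and each entry holds a `<loc>` and an optional `<lastmod>`. The `build_sitemap` function and the example URLs are hypothetical. On WordPress, a plugin will generate this file for you.

```python
import xml.etree.ElementTree as ET
from typing import List, Tuple

# Namespace required by the sitemaps.org protocol
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: List[Tuple[str, str]]) -> str:
    """Build a minimal XML sitemap from (URL, last-modified date) pairs.

    Dates are expected in W3C format such as "2025-01-15".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # characters like & are escaped automatically
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://www.example.com/", "2025-01-15"),
    ("https://www.example.com/about/", "2025-01-10"),
]))
```

Each page you publish becomes one more `<url>` entry. That is why sitemap plugins update the file automatically whenever you add a post.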

Here are the two most important things you need to do after creating a website or blog:

- Create an XML sitemap for your site

- Submit your site’s XML sitemap to Google

Let’s talk about each one of them so you can understand better.

Create your site’s XML sitemap

There are a ton of ways to create an XML sitemap for your site, including tools like Screaming Frog and XML Sitemap Generator, but if you’re using WordPress, it’s pretty easy.

There’s an incredible plugin you can use to easily create an XML sitemap.

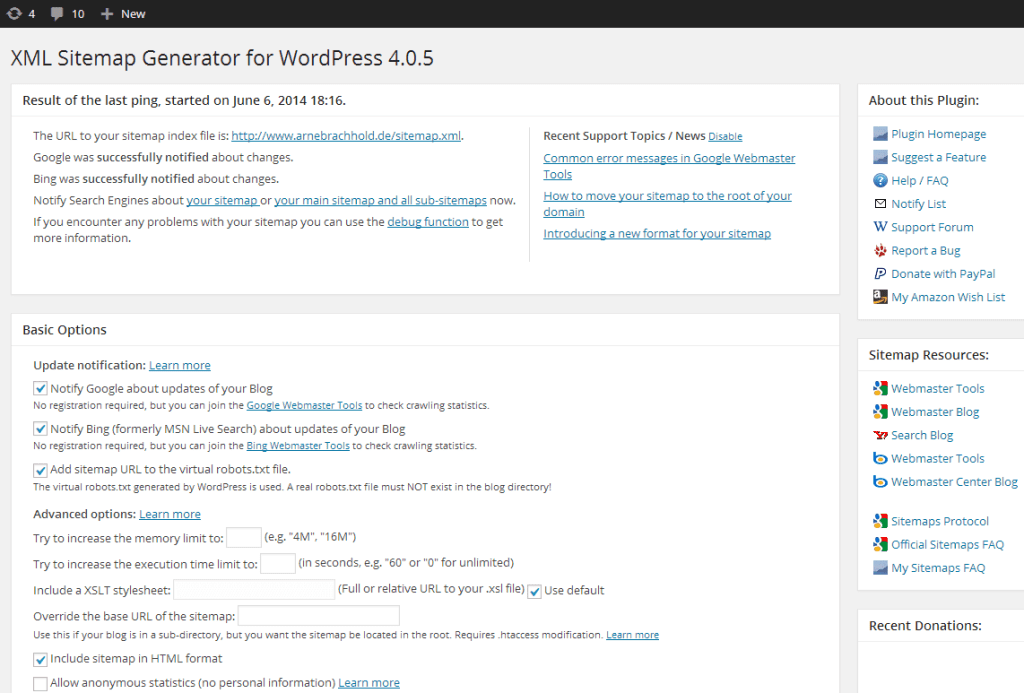

The plugin is called “Google XML Sitemaps”. It’s completely free to use, and millions of people use it worldwide to create sitemaps. It supports all kinds of WordPress-generated pages as well as custom URLs, and creates XML sitemaps that help search engines like Google, Bing, Yahoo and Ask.com better index your site.

Here’s what it looks like:

As you can see above, you don’t have to do anything extra as it works out of the box. Just install and activate the plugin.

Quick note: If you want, you can open the plugin configuration page, located under Settings > XML-Sitemap, and customize settings like priorities. The plugin automatically updates your sitemap whenever you publish a post, so there is nothing more to do.

Submit your XML sitemap to Google

To submit your XML sitemap to Google, go to Google Search Console, select your website and click the “Sitemaps” option, which looks like this:

Enter your sitemap URL (e.g., sitemap.xml) and click the Submit button, and you’re done. Google will fetch your sitemap and use it to discover, crawl and index the URLs listed in it. However, submitting a sitemap doesn’t guarantee that every listed URL will be indexed.
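Before you submit, it can be worth sanity-checking the sitemap. The sitemaps.org protocol expects every listed URL to use the same protocol and host as the sitemap itself. A common slip is an `http://` URL in an `https://` sitemap, or a URL without `www.` in a `www.` sitemap. The sketch below is a hypothetical pre-submission check that flags such URLs. `check_sitemap` is our own name, not a Search Console feature, and the sample sitemap is made up.

```python
import xml.etree.ElementTree as ET
from typing import List
from urllib.parse import urlparse

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def check_sitemap(xml_text: str, sitemap_url: str) -> List[str]:
    """Hypothetical check: return a list of problems (an empty list means none found)."""
    root = ET.fromstring(xml_text)
    locs = [(loc.text or "").strip() for loc in root.findall("sm:url/sm:loc", NS)]
    if not locs:
        return ["no <url><loc> entries found"]
    expected = urlparse(sitemap_url)
    problems = []
    for url in locs:
        parsed = urlparse(url)
        # sitemaps.org: listed URLs must share the sitemap's protocol and host
        if (parsed.scheme, parsed.netloc) != (expected.scheme, expected.netloc):
            problems.append("different protocol or host: " + url)
    return problems


sample = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>http://example.com/old-post/</loc></url>
</urlset>"""

print(check_sitemap(sample, "https://www.example.com/sitemap.xml"))
# ['different protocol or host: http://example.com/old-post/']
```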

3. Create a robots.txt file

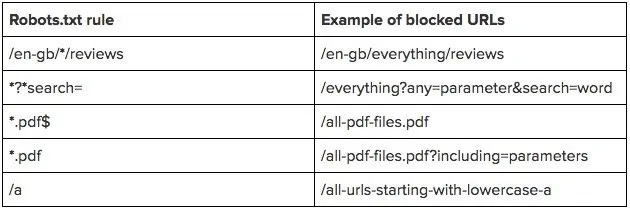

A robots.txt file is a text file on your website that tells search engine crawlers like Googlebot and Bingbot which URLs they can and can’t crawl. That means you can tell Google what to crawl and what to skip. Keep in mind that robots.txt controls crawling, not indexing: a blocked URL can still appear in search results if other pages link to it. For pages you want kept out of Google entirely, use a noindex meta tag instead.

Here’s an example of what a robots.txt file looks like:

As you can see above, you can block crawlers from URLs such as review pages, internal search results, PDF files and so on.

The good news is that you can use almost any text editor to create a robots.txt file for your website, as long as it can save standard UTF-8 text files.

We HIGHLY recommend adding a robots.txt file to your main domain and all subdomains on your website.

Google has a helpful robots.txt resource for creating a robots.txt file.

A robots.txt file lives at the root of your site. So, for site www.example.com, the robots.txt file lives at www.example.com/robots.txt. robots.txt is a plain text file that follows the Robots Exclusion Standard.

Here is a simple robots.txt file with two rules, explained below:

```text
# Group 1
User-agent: Googlebot
Disallow: /nogooglebot/

# Group 2
User-agent: *
Allow: /

Sitemap: https://www.example.com/sitemap.xml
```

Here’s the explanation:

- The user agent named Googlebot should not crawl the folder https://www.example.com/nogooglebot/ or any of its subdirectories.

- All other user agents can access the entire site.

- The site’s sitemap file is located at https://www.example.com/sitemap.xml.

Here are the directives you need to know:

- User-agent: the crawler that the following rules apply to

- Disallow: a URL path you want to block

- Allow: a URL path within a blocked parent directory that you want to unblock
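To see how a crawler reads these rules, you can use the robots.txt parser that ships with Python’s standard library, `urllib.robotparser`. The sketch below feeds it the example file above and asks which URLs different bots may fetch. The bot names and page URLs are just examples. One caveat: this parser applies the first matching rule in file order, while Google uses the most specific (longest) matching rule. For overlapping Allow/Disallow rules, its answers can therefore differ from Google’s.

```python
from urllib.robotparser import RobotFileParser

# The example robots.txt from above. This parser treats a blank line as the
# end of a group, so each group's lines are kept together.
ROBOTS_TXT = """\
# Group 1
User-agent: Googlebot
Disallow: /nogooglebot/

# Group 2
User-agent: *
Allow: /

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse from text instead of downloading

# Googlebot follows Group 1: everything except /nogooglebot/ is allowed
print(parser.can_fetch("Googlebot", "https://www.example.com/nogooglebot/page.html"))  # False
print(parser.can_fetch("Googlebot", "https://www.example.com/blog/"))  # True

# Any other bot falls back to Group 2 (User-agent: *), which allows everything
print(parser.can_fetch("Bingbot", "https://www.example.com/nogooglebot/page.html"))  # True

# Sitemap lines are collected separately (site_maps() needs Python 3.8+)
print(parser.site_maps())  # ['https://www.example.com/sitemap.xml']
```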

Here’s one more example of how to use it:

```text
User-agent: *
Allow: /about/company/
Disallow: /about/
```

In the example above, no search engine crawler (Googlebot, Bingbot or any other) is allowed to access the /about/ directory, except for the subdirectory /about/company/.
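Here’s the same kind of check for the /about/ example, again using Python’s `urllib.robotparser` with example URLs. The Allow line comes before the Disallow line, so Python’s first-match logic gives the same answers as Google’s longest-match rule here. If you swapped the two lines, Python would block /about/company/ while Google would still allow it.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Allow: /about/company/
Disallow: /about/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Python's parser checks the rules top to bottom and uses the first match
for path in ["/about/company/", "/about/company/team/", "/about/history/", "/blog/"]:
    allowed = parser.can_fetch("Bingbot", "https://www.example.com" + path)
    print(path, allowed)
```

This prints True for /about/company/ and anything below it, False for other pages under /about/, and True for everything outside /about/.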

Are you getting it? So basically the robots.txt file is there to tell search engine crawlers which URLs they should not visit on your website.

Important: Be sure to add your XML sitemap to the robots.txt file. This helps search engine crawlers find your sitemap and discover all of your site’s pages.

You can use this syntax:

```text
Sitemap: https://www.example.com/sitemap.xml
```

- Once it’s done, save and upload your robots.txt file to the root directory of your site. For example, if your domain is www.example.com, you will place the file at www.example.com/robots.txt.

- Once the robots.txt file is in place, check it for errors (for example, with the robots.txt report in Google Search Console).

You can use tools like the SEOptimer robots.txt generator to generate the file for you based on the pages you want to exclude.
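As a quick first pass before uploading, a small script can catch common typos. The sketch below is a hypothetical, deliberately small checker. `lint_robots_txt` is our own name, not a library function. It only knows the User-agent, Allow, Disallow and Sitemap fields and flags anything else for you to double-check. Treat it as a rough filter, not a substitute for testing how Google actually reads your file.

```python
from typing import List

# Fields this sketch recognizes; anything else is reported for a manual double-check
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap"}


def lint_robots_txt(text: str) -> List[str]:
    """Hypothetical checker: return one message per suspicious line."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append(f"line {number}: missing ':' in {line!r}")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field not in KNOWN_FIELDS:
            problems.append(f"line {number}: unrecognized field {field!r}")
        elif field in ("allow", "disallow") and not seen_user_agent:
            problems.append(f"line {number}: {field} rule comes before any User-agent line")
        elif field in ("allow", "disallow") and value and not value.startswith(("/", "*")):
            # Rule paths are expected to start with '/' (a leading '*' is tolerated here)
            problems.append(f"line {number}: path should start with '/': {value!r}")
        elif field == "user-agent":
            seen_user_agent = True
    return problems


sample = """\
User-agent: *
Disalow: /private/
Disallow: private/
Allow /public/
Sitemap: https://www.example.com/sitemap.xml
"""

for problem in lint_robots_txt(sample):
    print(problem)
```

Here it reports the misspelled "Disalow" field, the path missing its leading slash and the line missing its colon. It leaves the valid Sitemap line alone.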

4. Build some quality backlinks

Backlinks are extremely important. If you want your new site to rank well or quickly get indexed by Google search crawlers, you need links.

The more high-quality and relevant links your site has, the better your chances of ranking faster in search results.

Here are some of the incredible ways to build links to your new site.

Write high-quality guest posts: One of the evergreen ways to build high-quality and highly relevant links to your site is to write guest posts for other blogs in your niche.

We’ve written a huge guide on free guest posting websites where you can find over 250 sites to submit guest posts for free. You can write guest posts not only to build links but also to build solid relationships with other bloggers, grow your network, increase your traffic, improve your brand online and so on.

Blogger outreach: Blogger outreach is the practice of reaching out to other bloggers to build relationships. Most people do it all wrong as they email other bloggers asking for links. No one links to your stuff if they don’t even know your name or brand.

That’s why you need to be strategic about blogger outreach. First, build rapport by commenting on their blogs, leaving positive reviews, buying their products, linking to their content and so on. They will also reciprocate sooner or later. You can read more about blogger outreach strategy to learn how to do it right.

Create epic content: Creating link-worthy content takes time but it is always worth your efforts and money. No one links to mediocre content. If you’re creating 10x better content than your competitors, it’s easier to get incoming links.

Just make sure to analyse your competitors, find what’s working well for them and create evergreen content that beats theirs. You’ll then find plenty of ways to get high-quality links to your site. Use tools like Semrush to analyse your competitors!

5. Use blog directories to submit your site

One of the fastest ways to get your site indexed on Google search results is to submit your site to blog directories. Most blog directories usually allow you to submit your site’s content for free and they also give you traffic and links.

If you are wondering which directories to submit your site to, check out this list of high DA free blog submission sites. It includes over 30 of the highest domain authority blog directories where you can submit your site for free.

Also make sure to create social media profiles for your newly launched website or blog.

Use sites like Pinterest, Facebook, Twitter, LinkedIn and so on to create pages for your site and share your new posts there regularly.

Although links from social media sites are nofollow, these sites have very high domain authority (usually 80+), so sharing your posts there can help Google discover your new site faster.

How Does Google Crawl and Index Your Pages?

Whether you know it or not, Google crawls and processes billions of pages on the web.

Crawling: Google uses automated programs called crawlers (such as Googlebot) to find pages and download their text, images, and videos.

Indexing: Google analyzes the downloaded content and stores it in the Google index, a very large database.

If new pages are listed in your website’s sitemap, Google can discover and crawl them. When someone searches, Google ranks the indexed pages using over 200 ranking factors, including content, links, RankBrain and so on.

Once a page has been crawled and indexed, it can start appearing in Google Search. Whenever you update your content, Google picks up the changes the next time it recrawls the page.

Here’s a simple illustration of how it works.

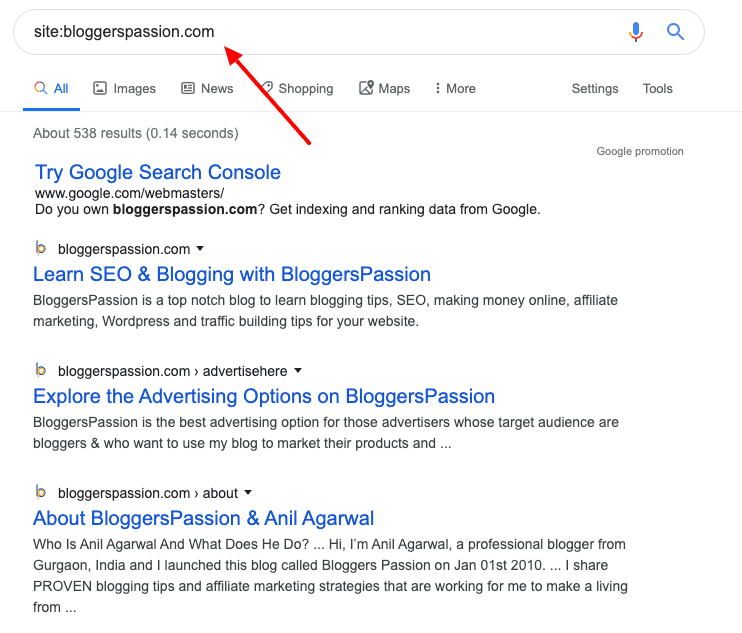
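To make crawling and indexing more tangible, here’s a deliberately tiny toy model in Python. It is nothing like Google’s real systems. The “web” is a made-up dictionary of four pages, crawling is a breadth-first walk over links starting from seed URLs, and the “index” is a simple inverted index that maps each word to the pages containing it. It does show why a page nothing links to stays undiscovered until it’s listed somewhere the crawler starts from, such as a sitemap.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

# A made-up "web": URL -> (page text, outgoing links). Purely illustrative.
WEB: Dict[str, Tuple[str, List[str]]] = {
    "https://www.example.com/": ("welcome to my blog about seo", ["https://www.example.com/sitemaps/"]),
    "https://www.example.com/sitemaps/": ("how to create an xml sitemap", ["https://www.example.com/robots/"]),
    "https://www.example.com/robots/": ("how to write a robots txt file", []),
    "https://www.example.com/orphan/": ("a page nothing links to", []),
}


def crawl(seeds: List[str]) -> Dict[str, str]:
    """Breadth-first crawl: start from seed URLs and follow links to new pages."""
    fetched: Dict[str, str] = {}
    queue = deque(seeds)
    while queue:
        url = queue.popleft()
        if url in fetched or url not in WEB:
            continue
        text, links = WEB[url]  # "download" the page
        fetched[url] = text
        queue.extend(links)
    return fetched


def build_index(pages: Dict[str, str]) -> Dict[str, Set[str]]:
    """Inverted index: each word maps to the set of URLs that contain it."""
    index: Dict[str, Set[str]] = {}
    for url, text in pages.items():
        for word in text.split():
            index.setdefault(word, set()).add(url)
    return index


pages = crawl(["https://www.example.com/"])
index = build_index(pages)
print(sorted(index["how"]))
print("https://www.example.com/orphan/" in pages)  # False: nothing links to it

# Listing the orphan page as an extra seed (as a sitemap would) lets the crawler find it
pages = crawl(["https://www.example.com/", "https://www.example.com/orphan/"])
print("https://www.example.com/orphan/" in pages)  # True
```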

Do you want to check if a particular site is listed in Google search results?

Just type “site:domainname.com” into Google search.

For example, here’s what Google displays for our site BloggersPassion:

As you can see above, our site is listed in Google search, with over 500 pages (including pages, blog posts and so on) indexed in Google search results. Keep in mind that the number of results shown for a site: search is only an estimate.


Final Thoughts on Indexing Your New Site on Google

Search engine crawlers are getting faster and smarter.

Pinging your site, using Google Search Console, building links from other sites, smart linking: all of these things can help. But without a sitemap, it can be much harder for search crawlers to find all of your pages. Your content can’t rank until it’s indexed, so getting your site indexed on Google quickly is the first step toward faster search rankings.

Did you find this guide on getting Google to index your site quickly useful? Please consider sharing it with others. If you have any questions, do let us know in the comments below.

FAQs About Indexing Blog Posts in Google

Here are some frequently asked questions around how to get Google to index your site.

What are the benefits of getting your site indexed on Google?

Indexing your website or blog on Google has the following benefits:

→ People can quickly find your site and content

→ You get more organic traffic

→ You get more conversions

→ You can increase your sales

→ And the list goes on

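How long does it take for Google to index a new site?

The short answer is: it varies. Google’s crawlers (also called spiders) constantly crawl the web, but Google says crawling a new page can take anywhere from a few days to a few weeks. Larger sites with lots of content can also take longer to be crawled completely.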

Should you have a Google Search Console account?

Yes, you definitely should have an account in Google Search Console (GSC). You can create one for free using your Gmail account. Once you have access to GSC, you can add your site(s) so they can be indexed and appear in Google search results.

How do you index a Blogger post on Google?

To index a Blogger post on Google, you first need to create an account and verify your site in Google Search Console. Then you can request indexing for an individual blog post using the URL Inspection tool.


Hi sir, creating an XML sitemap was definitely new for me as I am a new blogger. I will follow your tips and I am sure my site will do well in rankings. Thank you for sharing such good information for new bloggers like me.

Nice article, sir. The URL inspection feature is new to me. After reading this post, I am going to manually add the pages that are not showing on Google, and also hide the pages that don’t need to be on Google using robots.txt, right?

Thanks sir. 🙂

Hi Pradip

You should use the URL inspection feature whenever you face issues with content indexing, and you should use the robots.txt file to block URLs you don’t want search engines to crawl.